Jeff Bezos must really be getting tired of these headlines coming up all the time. It seems that Amazon’s facial recognition software (known as Rekognition) has been subjected to yet another test and come up a little short. Or a lot short, particularly if you happen to be one of the more than two dozen state lawmakers who showed up as hits when their photos were matched against a database of mugshots. But hey… when you’re making omelets you’ve got to crack a few eggs, right? (CBS San Francisco)

A recent test of Amazon’s facial recognition technology reportedly ended with a major fail, as some state lawmakers turned up as suspected criminals.

The test performed by the American Civil Liberties Union screened 120 lawmakers’ images against a database of 25,000 mugshots. Twenty-six of the lawmakers were wrongly identified as suspects.

The ACLU said the findings show the need to block law enforcement from using this technology in officers’ body cameras. Meanwhile, supporters of facial recognition say police could use the technology to help alert officers to criminals, especially at large events.

As usual, let’s get the obvious joke out of the way first. If the software is identifying California legislators as criminals, honestly… how broken is it really? (Insert rimshot gif here.)

Getting back to the actual story, the first thing to note is that the “test” in question was performed by the American Civil Liberties Union (ACLU). At least that’s how it’s phrased in the CBS report. Last I checked, they weren’t a software development firm, so did they really design and perform the test themselves, or did they shop the job out to a firm with more direct experience? I’d like to see the details.

Of course, the results aren’t that suspect. Of all the facial recognition software out there that we’ve looked at, Amazon’s seems to be the one that winds up producing the most spectacular (and frequently hilarious) epic fails when put to independent testing. In that light, perhaps the ACLU wasn’t off the mark.

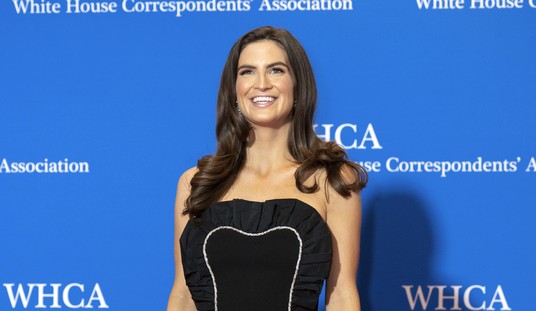

Of course, the ACLU isn’t looking to improve the technology. This test was run so they can continue their campaign to prevent law enforcement from using the software. Democratic Assemblymember Phil Ting of San Francisco (who was tagged as a felon) is quoted as saying, “While we can laugh about it as legislators, it’s no laughing matter if you are an individual who is trying to get a job, for an individual trying to get a home. If you get falsely accused of an arrest, what happens? It could impact your ability to get employment.”

These types of scare tactics are all too common and should be derided. I’ve asked multiple times now and am still waiting for an answer to one simple question. Does anyone have evidence of even a single instance where someone was misidentified by facial recognition and went on to be prosecuted (or persecuted, as Ting suggests) because the mistake wasn’t discovered? I’ve yet to hear of a case. Did the police show up and arrest Ting after he was misidentified? I somehow doubt it.

Look, the technology is still in its infancy and it’s got a few bugs in it. They’re working them out as they go. Eventually, they’ll get it up to speed and the error rates should drop down to acceptable levels. And if this software can help catch a suspect in a violent crime in a matter of minutes or hours rather than days or weeks after they were spotted by a security camera, that’s a tool that the police need to have.
