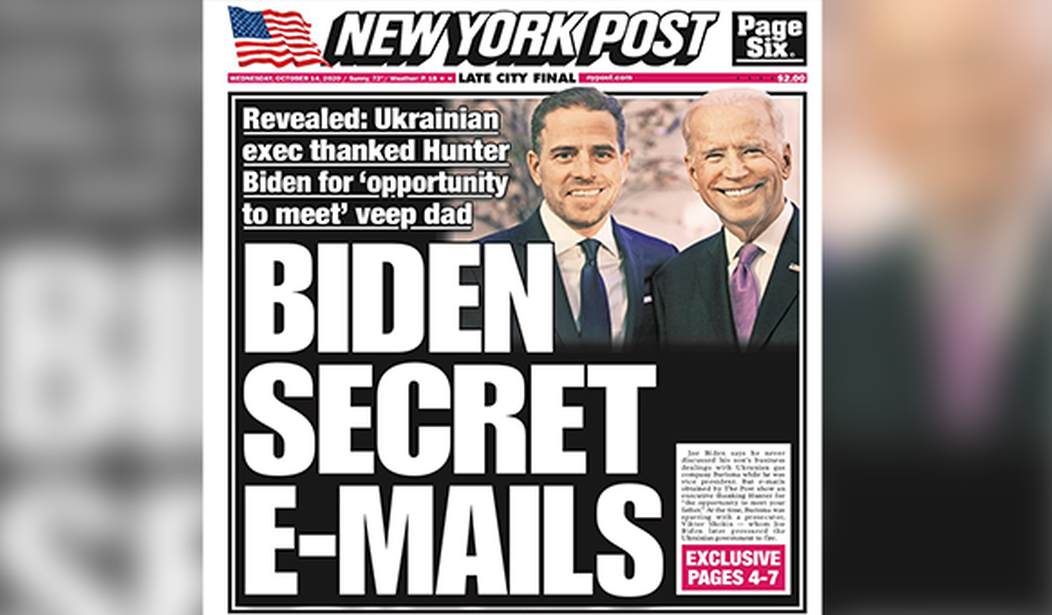

Ben Smith reported in yesterday’s edition of the NY Times that a group of top journalists are having weekly Zoom meetings with Harvard academics from the Shorenstein Center on Media. The idea of the meetings is to discuss “misinformation,” but right from the start, there’s cause for concern about what new conclusions are being reached about that topic. One example used as a point of discussion in the Zoom meetings was the handling of the Hunter Biden laptop story.

The news media’s handling of that narrative provides “an instructive case study on the power of social media and news organizations to mitigate media manipulation campaigns,” according to the Shorenstein Center summary.

As Smith points out, the laptop story may have been an attempt at “media manipulation” in the strict sense that any October surprise story pushed by a campaign (on the right or left) is an effort to sway the media’s coverage. But that doesn’t mean the story itself is “misinformation.” Indeed, there’s pretty strong evidence that many of the emails and photos highlighted as part of the Hunter Biden laptop story are real.

So what exactly are the lessons Harvard’s Shorenstein Center thinks journalists should take from the media’s handling of that story? Is it that social media sites can coordinate to block “misinformation,” or is it that the term “misinformation” is just a buzzword that, in practice, means news that left-wing journalists and academics don’t like? Smith suggests it may be more the latter:

While some academics use the term carefully, “misinformation” in the case of the lost laptop was more or less synonymous with “material passed along by Trump aides.” And in that context, the phrase “media manipulation” refers to any attempt to shape news coverage by people whose politics you dislike. (Emily Dreyfuss, a fellow at the Technology and Social Change Project at the Shorenstein Center, told me that “media manipulation,” despite its sinister ring, is “not necessarily nefarious.”)

The focus on who’s saying something, and how they’re spreading their claims, can pretty quickly lead Silicon Valley engineers to slap the “misinformation” label on something that is, in plainer English, true.

Joan Donovan, the research director at the Shorenstein Center, disagrees with that. She argues that the Hunter Biden laptop case study was meant merely to provoke thought, not to lead to a clear conclusion about truth or falsehood. Maybe so, but even that seems worrisome. The only solid lesson that ought to be taken from that episode is that social media companies (Facebook, Twitter, etc.) have no business stepping in to decide which stories can be shared, because they often don’t know what’s true.

Somehow I don’t think that’s the lesson Joan Donovan is taking from it. In a recent article she co-wrote, Donovan described the need for more regulation of social media content, specifically as it relates to what is true:

Social media is built for openness and scale, not safety or accuracy. Instead, repetition, redundancy, reinforcement and responsiveness are the mechanisms by which content on social media circulates, drives public conversation and eventually becomes convincing — not truth. When users of social media see a claim over and over, across multiple platforms, they start to feel it to be more true, especially as groups interact with it, whether or not it is…

We know that speculation, gossip and rumor are normal aspects of conversation, and it would be impossible to root them all out. But like a garden, social media must be tended so that it can flourish in a healthy way. Because the sheer scale of posts outpaces human ability to moderate content and manipulated media can easily fool artificial intelligence, journalists, researchers, advocates and corporations share the responsibility of safeguarding online spaces.

Again, this desire to regulate content that wasn’t true (or might not be true) is the reason that Facebook and Twitter limited the sharing of the Hunter Biden laptop story. But in the end, what they actually did was limit the spread of a story that was substantially true but heavily disfavored by the left in the weeks prior to an election. It shouldn’t be hard to see how that’s a problem. And, to his credit, Ben Smith does see the potential for getting this wrong and even offers a recent example: the discussion of the lab leak theory.

Last April, for instance, I tweeted about what I saw as the sneaky way that anti-China Republicans around Donald Trump were pushing the idea that Covid-19 had leaked from a lab. There were informational red flags galore. But media criticism (and I’m sorry you’ve gotten this far into a media column to read this) is skin-deep. Below the partisan shouting match was a more interesting scientific shouting match (which also made liberal use of the word “misinformation”). And the state of that story now is that scientists’ understanding of the origins of Covid-19 is evolving and hotly debated, and we’re not going to be able to resolve it on Twitter.

The point isn’t that the lab leak theory is true. It may not be. The point is that the scientists who immediately claimed it was “misinformation” were themselves being led by a motivated partisan at the center of the story. Gradually, journalists and other scientists pushed back and noted that, even if it’s not as likely as the natural transfer explanation, the lab leak theory shouldn’t have been summarily ruled out given the lack of evidence either way.

In other words, labeling something misinformation (and thereby labeling some alternative idea or view as fact when the real story is more complicated) may sometimes provide clarity, but it may also sometimes be a form of misinformation in its own right. Ben Smith doesn’t quite go this far, but I will. The term “misinformation” could quickly become just a better-credentialed replacement for the phrase “fake news,” i.e. a vague criticism lobbed by partisans looking to discredit their opponents. If so, popularizing that take among journalists could wind up doing more harm than good.
