The latest generation of Artificial Intelligence continues to cause problems, particularly in creative circles. The new tools are so easy to adopt that people are hijacking the original photographs and artwork of others, modifying them at will, and republishing. This is frequently done with the original creator receiving no compensation or credit, leading to legal nightmares across the board. But now, in the next stage of the technology wars, some of the biggest camera makers are launching new developments of their own to combat it. New software being deployed by Nikon, Sony, and Canon will assign “digital watermarks” to the images captured by their equipment. This will allow any such images to be checked and verified when people attempt to publish them. Those found to have been faked or altered may then be flagged and rejected. Of course, this adaptation carries potential problems of its own, but it’s still fascinating. (Interesting Engineering)

As fake images become more convincing and widespread, camera makers are fighting back with new technology that can verify the authenticity of photos. Nikon, Sony Group, and Canon are working on embedding digital signatures in their cameras, which will act as proof of origin and integrity for the images.

As Nikkei Asia reports, digital signatures will contain information such as the date, time, location, and photographer of the image and will be resistant to tampering. This will help photojournalists and other professionals who need to ensure the credibility of their work. Nikon will offer this feature in its mirrorless cameras, while Sony and Canon will also incorporate it in their professional-grade mirrorless SLR cameras.

The three camera giants have agreed on a global standard for digital signatures, which will make them compatible with a web-based tool called Verify. This tool, launched by an alliance of global news organizations, technology companies, and camera makers, will allow anyone to check the credentials of an image for free. Verify will display the relevant information if an image has a digital signature. If artificial intelligence creates or alters an image, Verify will flag it as having “No Content Credentials.”
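For readers curious how a tamper-resistant signature of this kind can work in principle, here is a minimal sketch in Python. It is not the camera makers' implementation or the Verify tool's actual logic. The names `CAMERA_KEY`, `sign_image`, and `check_image` are hypothetical, and the sketch uses a shared-secret HMAC from the standard library as a stand-in for the public-key signatures a real system would presumably use. The idea it illustrates is simply that the metadata is bound to a hash of the exact image data, so changing either one breaks the check.

```python
import hashlib
import hmac
import json

# Hypothetical stand-in for a secret held inside the camera. This is an
# assumption for illustration; a real scheme would likely use public-key
# signatures so that verifiers never hold the signing secret.
CAMERA_KEY = b"demo-key-not-a-real-camera-secret"


def _encode(payload: dict) -> bytes:
    """Serialize the payload deterministically so signatures are repeatable."""
    return json.dumps(payload, sort_keys=True).encode("utf-8")


def sign_image(image_bytes: bytes, metadata: dict) -> dict:
    """Bind the metadata to a hash of the image data and sign both together."""
    payload = {
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "metadata": metadata,
    }
    signature = hmac.new(CAMERA_KEY, _encode(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def check_image(image_bytes: bytes, credential):
    """Return the signed metadata if everything matches, otherwise None."""
    if credential is None:
        return None  # the image carries no credential at all
    payload = credential["payload"]
    expected = hmac.new(CAMERA_KEY, _encode(payload), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, credential["signature"]):
        return None  # the metadata or the signature was tampered with
    if payload["image_sha256"] != hashlib.sha256(image_bytes).hexdigest():
        return None  # the image data changed after signing
    return payload["metadata"]


photo = b"raw sensor data"
credential = sign_image(photo, {"date": "2024-01-01", "photographer": "A. Example"})

print(check_image(photo, credential))            # original image and credential
print(check_image(photo + b"edit", credential))  # image altered after signing
print(check_image(photo, None))                  # no credential attached
```

In this sketch only the untouched photo returns its metadata. The altered copy and the image with no credential both come back empty, which is roughly the situation the excerpt describes as “No Content Credentials.”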

One of the immediate concerns that comes to mind when considering this is the issue of digital security and privacy. If you own one of these cameras and want to participate in this security program, you’re going to be stamping every one of your photos with a lot of information, including your name and your location when you were taking the photographs. If that information can be embedded, it can be hacked. Perhaps not immediately, but sooner or later someone will manage it.

Also, the level of security depends entirely on how many actors (good and bad) are participating. If someone fakes or alters an image and attempts to upload it anywhere, the people accepting it will have to be using the new “Verify” system to test the digital watermark. If they aren’t doing that, then there is no protection at all. I have to wonder how many people will really go to the bother unless the process can be entirely automated.

Stop and consider how many images are uploaded to sites like Instagram and Twitter every day. Reports currently put the average at 95 million photos and videos uploaded to Instagram daily. What would it do to their processing infrastructure to have to scan every one of them with a new verification system before publishing? And even then, they would only catch the ones that had originally been taken on one of the sponsoring companies’ equipment and then later modified by others. I’m not saying that this isn’t a problem or that it’s not worth at least trying, but it clearly seems problematic.
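To put that daily figure in perspective, here is a quick back-of-the-envelope calculation in Python. It takes the reported 95 million uploads as given and assumes, purely for illustration, that they arrive evenly around the clock (real traffic would have peaks well above the average).

```python
# Reported average from the paragraph above; even spacing is an assumption.
uploads_per_day = 95_000_000
seconds_per_day = 24 * 60 * 60  # 86,400

checks_per_second = uploads_per_day / seconds_per_day
print(round(checks_per_second))  # roughly 1,100 verifications every second
```

That works out to about 1,100 verification checks per second, every second of the day, for Instagram alone.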

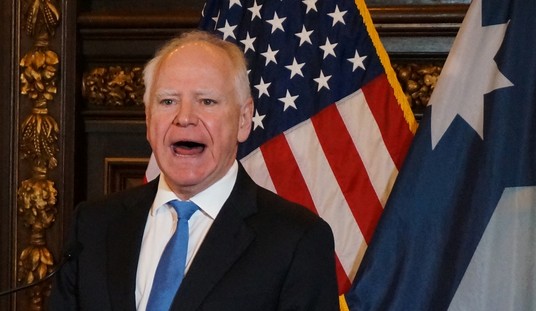

The further we get away from traditional film and paper document publishing, the more complicated things become. As we’ve said here repeatedly, the AI genie is out of the bottle and it’s not going back in. We live in a reality where deep fakes and AI-generated images that are not based in reality exist. Sadly, that may simply mean that we all have to become more skeptical consumers of photo and video content from now on and try not to leap to conclusions without looking for verification from trusted sources. When another photo of Donald Trump being “arrested” surfaces, perhaps someone should call Trump’s staff first and check before flying off the handle.
