One of the dirty secrets in science is that there is such a large premium for finding “interesting” results that scientists sometimes fudge the rules.

Actually, often. So often that scientists have a term for it that has even leaked out into the general population: “p-hacking.”

P-hacking describes using a statistical technique to tease questionable or spurious correlations out of a data set until you find something “interesting.” In an honest world scientists will propose a hypothesis, develop as good a data set as possible (very very hard, usually), and test the hypothesis using standardized and well-understood techniques. Lots of correlations exist, some causal, many random.

Statisticians have some basic rules for deciding whether a correlation is statistically significant, and the key quantity is called the “p-value.” P stands for “probability.” A low p-value doesn’t prove anything, but it does indicate that the result would be unlikely if there were no real relationship between the two variables.

P-hacking is just trolling for things with a low “p.” The relationship can be spurious, or not. That is why you start with a hypothesis to test. You already suspect there is a causal relationship. You can, for instance, test the relationship between vaccines and disease.

Suppose you’re testing a pill for high blood pressure, and you find that blood pressures did indeed drop among people who took the medicine. The p-value is the probability that you’d find blood pressure reductions at least as big as the ones you measured, even if the drug was a dud and didn’t work. A p-value of 0.05 means there’s only a 5 percent chance of that scenario. By convention, a p-value of less than 0.05 gives the researcher license to say that the drug produced “statistically significant” reductions in blood pressure.

Journals generally prefer to publish statistically significant results, so scientists have incentives to select ways of parsing and analyzing their data that produce a p-value under 0.05. That’s p-hacking.
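To see why picking among analyses is so effective, here is a minimal sketch in Python. It is an illustration under stated assumptions, not anything taken from the study discussed below: the data are simulated, the "drug" is a dud by construction, and the function names are made up for this example. If a researcher checks 20 independent outcomes at the 0.05 threshold, the chance that at least one comes up "significant" by luck alone is 1 - 0.95^20, or roughly 64 percent.

```python
import math
import random
import statistics


def two_sided_p(group_a, group_b):
    """Approximate two-sided p-value for a difference in means (z-test)."""
    se = math.sqrt(statistics.variance(group_a) / len(group_a)
                   + statistics.variance(group_b) / len(group_b))
    z = (statistics.mean(group_a) - statistics.mean(group_b)) / se
    # Two-sided normal tail probability, 2 * (1 - Phi(|z|)), via erfc.
    return math.erfc(abs(z) / math.sqrt(2))


def chance_of_false_positive(num_tests, alpha=0.05):
    """Chance that at least one of num_tests independent null tests has p < alpha."""
    return 1 - (1 - alpha) ** num_tests


def simulate_p_hacking(num_outcomes=20, n=50, trials=500, alpha=0.05, seed=0):
    """Fraction of simulated studies of a dud drug with at least one 'significant' outcome."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        for _ in range(num_outcomes):
            # Both groups come from the same distribution: the drug does nothing.
            treated = [rng.gauss(0, 1) for _ in range(n)]
            control = [rng.gauss(0, 1) for _ in range(n)]
            if two_sided_p(treated, control) < alpha:
                hits += 1
                break  # the p-hacker stops at the first publishable result
    return hits / trials


if __name__ == "__main__":
    print("theory:    ", round(chance_of_false_positive(20), 3))
    print("simulation:", simulate_p_hacking())
```

The simulated fraction should land near the theoretical figure. A single pre-specified test on the same data would give a false positive only about 5 percent of the time.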

“It’s a great name—short, sweet, memorable, and just a little funny,” says Regina Nuzzo, a freelance science writer and senior advisor for statistics communication at the American Statistical Association.

P-hacking as a term came into use as psychology and some other fields of science were experiencing a kind of existential crisis. Seminal findings were failing to replicate. Absurd results (ESP is real!) were passing peer review at well-respected academic journals. Efforts were underway to test the literature for false positives and the results weren’t looking good. Researchers began to realize that the problem might be woven into some long-standing and basic research practices.

In any case, all this is my explanatory run-up to an amusing case of p-hacking so obvious as to be funny. Or at least it would be funny if people weren’t going to take it seriously.

A group of scientists have published a paper purporting to prove that people who get vaccinated for COVID-19 have fewer car accidents than those who don’t. They don’t make the claim that this means vaccines reduce car accidents, although some jerk might make the argument just for fun. They make the claim that vaccine skeptics are less risk averse than vaccinators and hence jerks on the road.

Adults who neglect COVID-19 health recommendations may also neglect basic road safety, study finds #MedPub #MedTwitter #MedEd #MedNews https://t.co/fEeAGZ3w6R

— MedPub.io (@MedPubApp) December 12, 2022

As somebody who was forced to take a statistical methods class in graduate school, I immediately recognized this as a classic case of p-hacking. In the first of my two methods classes the professor showed us how it is done, and it was amusing.

If you take a data set of international scope you can easily find that there is a high degree of correlation between clean drinking water and literacy. You could plausibly argue from the statistics that one causes the other, when in fact there is another variable: countries with poor access to clean water are poor. Poor countries have low access to education. Education and literacy are correlated, and even causally related.

Yet the correlation between clean drinking water and literacy is very strong, and its p-value very low. Only common sense prevents one from asserting a causal relation.
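The clean-water example can be simulated in a few lines. This is a sketch on made-up data, assuming a single hidden variable (labeled `wealth` here) drives both water access and literacy; the variable names and noise levels are illustrative assumptions, not real country statistics. The raw correlation comes out strong, but once wealth is held fixed with a partial correlation, almost nothing is left.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def partial_correlation(xs, ys, zs):
    """Correlation of xs and ys after removing the linear effect of zs."""
    r_xy, r_xz, r_yz = pearson(xs, ys), pearson(xs, zs), pearson(ys, zs)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


rng = random.Random(1)
# Hypothetical "countries": wealth is the hidden common cause.
wealth = [rng.gauss(0, 1) for _ in range(2000)]
clean_water = [w + rng.gauss(0, 0.5) for w in wealth]  # depends only on wealth
literacy = [w + rng.gauss(0, 0.5) for w in wealth]     # depends only on wealth

raw = pearson(clean_water, literacy)
adjusted = partial_correlation(clean_water, literacy, wealth)
print(f"raw correlation:        {raw:.2f}")
print(f"controlling for wealth: {adjusted:.2f}")
```

In this toy setup water and literacy never influence each other, yet the raw correlation sits around 0.8. Real confounders are rarely this easy to measure, which is the whole problem.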

That is exactly what was done here, and it was published in a peer-reviewed journal. This piece of junk:

Reasons underlying hesitancy to get vaccinated against COVID-19 may be associated with increased risks of traffic accidents according to a new study in The American Journal of Medicine. Researchers found that adults who neglect these health recommendations may also neglect basic road safety. They recommend that greater awareness might encourage more COVID-19 vaccination.

Motor vehicle traffic crashes are a common cause of sudden death, brain injury, spinal damage, skeletal fractures, chronic pain, and other disabling conditions. Traffic crash risks occur as a complication of several diseases including alcohol misuse, sleep apnea, and diabetes. However, the possible association between vaccine hesitancy and traffic crashes had not been previously studied.

“COVID-19 vaccination is an objective, available, important, authenticated, and timely indicator of human behavior—albeit in a domain separate from motor vehicle traffic,” explained lead investigator Donald A. Redelmeier, MD, Evaluative Clinical Sciences, Sunnybrook Research Institute; Department of Medicine, University of Toronto; Institute for Clinical Evaluative Sciences; and Division of General Internal Medicine, Sunnybrook Health Sciences Centre, Toronto, ON, Canada. …

The investigators conducted a population-based longitudinal cohort analysis of adults and determined COVID-19 vaccination status through linkages to individual electronic medical records. Traffic crashes requiring emergency medical care were subsequently identified by multicenter outcome ascertainment of 178 centers in the region over a one-month follow-up interval.

Over 11 million individuals were included, of whom 16% had not received a COVID-19 vaccine. The cohort accounted for 6,682 traffic crashes during follow-up. Unvaccinated individuals accounted for 1,682 traffic crashes (25%), equal to a 72% increased relative risk compared to those vaccinated. The increased risk was more than the risk associated with diabetes and similar to the relative risk associated with sleep apnea.

The increased traffic risks among unvaccinated adults extended to diverse subgroups (older & younger; drivers & pedestrians; rich & poor) and was equal to a 48% increase after adjustment for age, sex, home location, socioeconomic status, and medical diagnoses. The increased traffic risks extended across the entire spectrum of crash severity and appeared similar for Pfizer, Moderna, or other vaccines. The increased risks collectively amounted to 704 extra traffic crashes.

Another factor: a 48% increased risk sounds big, but the absolute risk is tiny. Going by the figures quoted above, 6,682 crashes among more than 11 million people is roughly 0.06% over the one-month follow-up. That is a very small number, and you would have to conduct this analysis over multiple years to tease out whether this is a statistical blip or a real phenomenon. Blips happen all the time, which is why studies can be notoriously difficult to replicate. Often dramatic claims rest on non-replicable studies, which has caused a crisis in social science research.
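The gap between relative and absolute risk can be checked roughly from the figures in the press release. The sketch below assumes exactly 11 million people and exactly 16% unvaccinated; the release says "over 11 million" and rounds its percentages, so treat the outputs as approximations. The function name is made up for this example.

```python
def crash_risks(total_people, unvaccinated_share, total_crashes, unvaccinated_crashes):
    """Return (risk_unvaccinated, risk_vaccinated, relative_risk, absolute_difference)."""
    unvaccinated = total_people * unvaccinated_share
    vaccinated = total_people - unvaccinated
    vaccinated_crashes = total_crashes - unvaccinated_crashes
    risk_unvax = unvaccinated_crashes / unvaccinated
    risk_vax = vaccinated_crashes / vaccinated
    return risk_unvax, risk_vax, risk_unvax / risk_vax, risk_unvax - risk_vax


# Figures quoted in the press release; 11_000_000 and 0.16 are rounded assumptions.
risk_unvax, risk_vax, relative_risk, abs_diff = crash_risks(
    total_people=11_000_000,
    unvaccinated_share=0.16,
    total_crashes=6_682,
    unvaccinated_crashes=1_682,
)

print(f"unvaccinated risk:   {risk_unvax:.4%}")
print(f"vaccinated risk:     {risk_vax:.4%}")
print(f"relative risk:       {relative_risk:.2f}")
print(f"absolute difference: {abs_diff:.4%}")
```

With these rounded inputs the unadjusted relative risk comes out near 1.77, close to the 72% increase the release reports, while the absolute difference is about four crashes per 10,000 people over the month.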

Sometimes non-replicability comes from p-hacking, sometimes from blips that turn out to be insignificant. It is like bad polls.

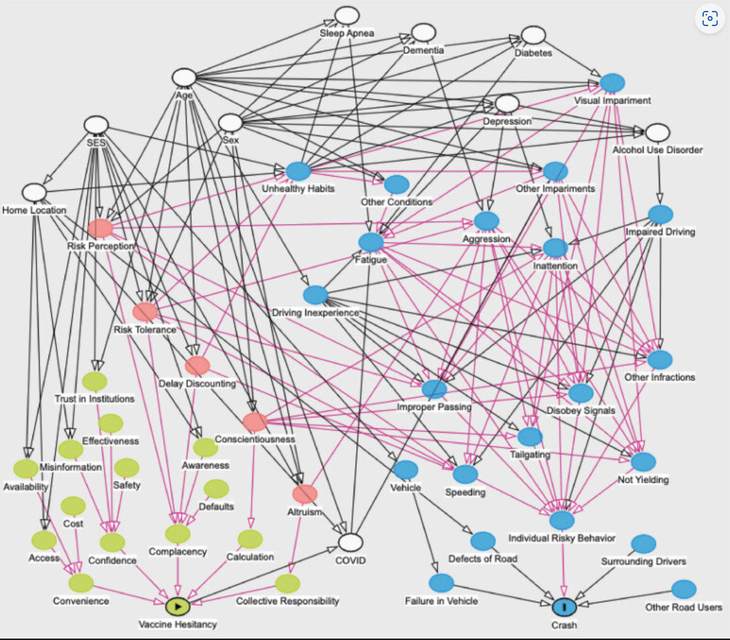

You can even see a visual representation of p-hacking provided by the researchers, who believe it shows the causal links. Instead it shows them trolling for results.

This is a nice, graphical representation of BS, not a carefully constructed, detailed scientific reconstruction of a real phenomenon.

Now, just because there is obviously p-hacking going on here doesn’t mean that there is no possible relationship between the two things: vaccine hesitancy and driving behavior might plausibly be correlated. But there are likely so many other confounding factors (where people live would be an obvious one, if vaccine hesitancy is non-randomly distributed geographically, for instance) that you would have to very carefully construct a dataset, and even then the effect would have to be huge to be plausible.

P-hacking is one of the many reasons why you should be very skeptical of dramatic scientific claims, especially in social science. The incentives in science skew very heavily to finding results that stand out, so scientists find results that stand out. That, my friend, is economics. You get more of what you pay for.

This doesn’t mean science is bunk. It just means there is a lot of bunk that passes for science.

Welcome to real life.
