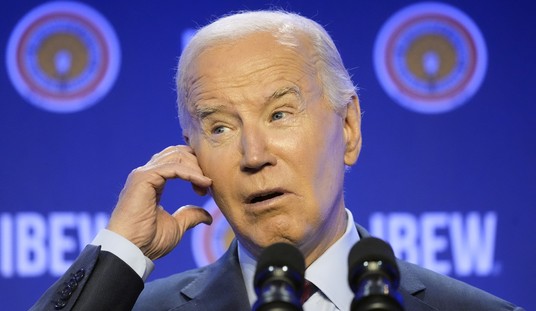

There is still a debate in the United States over whether and how we should regulate Artificial Intelligence as the technology continues to advance and infiltrate every aspect of our online lives. But the European Union isn’t waiting around for the robots to arrive and unseat their leaders. The European Parliament approved new legislation this week intended to rein in the technology with an eye toward preventing job losses for humans and suppressing the spread of misinformation and bias, particularly in matters that could impact elections. At least based on the general description they are providing, however, it’s unclear how such a law could be successfully implemented and enforced. (CNBC)

The European Parliament has approved the bloc’s landmark rules for artificial intelligence, known as the EU AI Act, clearing a key hurdle for the first formal regulation of AI in the West to become law.

The rules are the first comprehensive regulations for AI, which has become a key battleground in the global tech industry, as companies compete for a leading role in developing the technology — particularly generative AI, which can generate new content from user prompts.

What generative AI is capable of, from producing music lyrics to generating code, has wowed academics, businesspeople, and even school students. But it has also led to worries around job displacement, misinformation, and bias.

We should note that the vote held yesterday did not result in the new rules becoming law. This is only the first step of a longer process. But given the overwhelming nature of the vote, it will very likely come to pass eventually. The measure received 499 votes in favor with 28 voting no and 93 abstentions.

If adopted, the rules would apply to all developers of systems such as ChatGPT and other generative AI products. Developers would be required to submit their designs to the EU for review prior to releasing them commercially. There would also be a ban on real-time biometric identification systems as well as “social scoring” systems.

Most of that sounds okay to a certain extent, but some of the language sounds rather vague. This is potentially an issue that we’ll run into in the United States and elsewhere. It sounds as if we have people crafting regulations governing the deployment of Artificial Intelligence who don’t really know very much about how the technology was developed or what the underlying challenges and concerns may be.

Implementing and enforcing the law in the way it’s being described also looks to be problematic. They want to prevent the elimination of jobs, which seems like a noble goal. But what will they say to companies that find a way to operate more efficiently using AI tools and no longer require as many workers? Amazon has already been cutting many jobs and replacing warehouse workers with robots, and nobody stopped them.

Also, detecting the use of AI will not always be simple because it’s generally not the companies that develop these products that are using them in real-world scenarios (with ChatGPT being the exception to the rule). If a search engine or a company’s customer service division purchases a license for one of these programs and incorporates it into its existing systems, how would you know? And how could you stop them in this interconnected world?

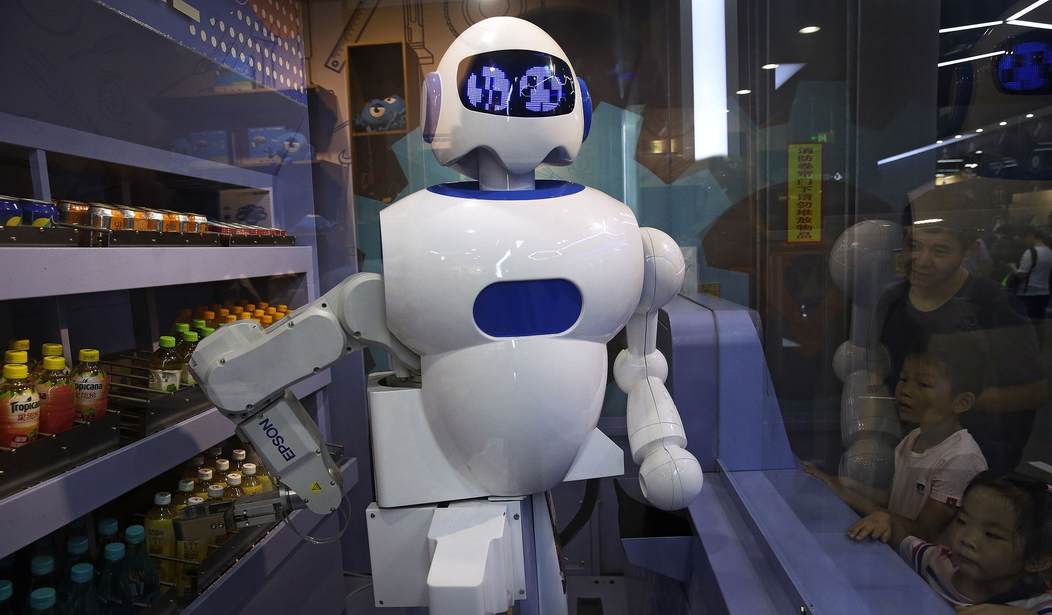

AI is already showing up in some unexpected places, and as the next generations of the technology roll out, it will likely only become more common. And the better it gets, the harder it will be to detect, so attempts to regulate it will face significant challenges. Or at least until the killer robots show up, anyway.
